Read a SQL Server transaction log

SQL Server transaction logs contain records describing changes made to a database. They store enough information to recover the database to a specific point in time, or to replay or undo a change. But how do you see what’s in them, find a specific transaction, see what has happened, and revert changes, for example by recovering accidentally deleted records?

Seeing what is stored in an online transaction log or a transaction log backup is not so simple.

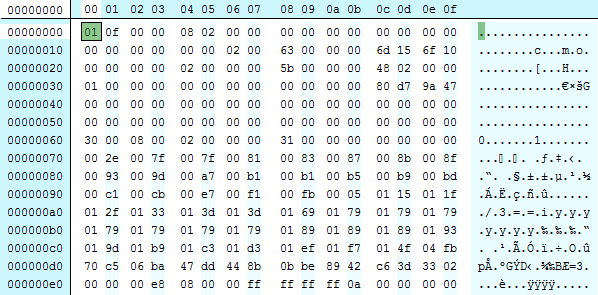

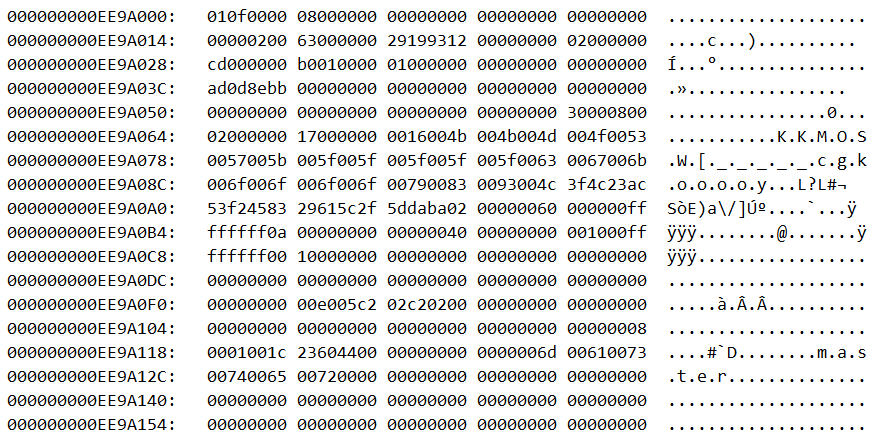

Opening LDF and TRN files in a binary editor shows unintelligible records, so these files clearly cannot be read directly. For instance, this is an excerpt from an LDF file:

Use fn_dblog

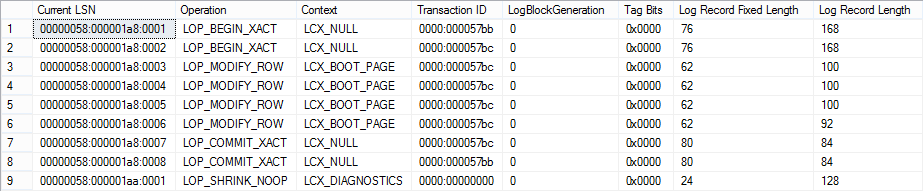

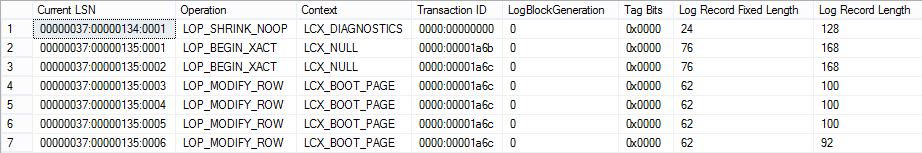

fn_dblog is an undocumented SQL Server function that reads the active portion of an online transaction log.

Let’s look at the steps you have to take and the way the results are presented:

- Run the fn_dblog function

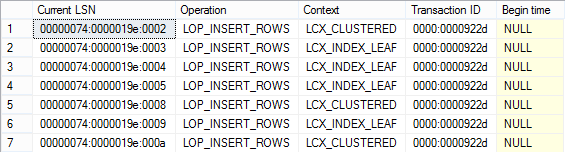

- From the results set returned by fn_dblog, find the transactions you want to see To see transactions for inserted rows, run:

- Find the column that stores the value inserted or deleted – check out the RowLog Contents 0 , RowLog Contents 1 , RowLog Contents 2 , RowLog Contents 3 , RowLog Contents 4, Description and Log Record Row data is stored in different columns for different operation types. To be able to see exactly what you need using the fn_dblog function, you have to know the column content for each transaction type. As there’s no official documentation for this function, this is not so easy

- Convert binary data into table data taking into account the table column data type. Note that mechanisms for conversion are different for different data types

Select * FROM sys.fn_dblog(NULL,NULL)

As the function itself returns 129 columns, returning only the specific ones is recommended as well as narrowing down the results to a specific transaction type, if applicable

SELECT [Current LSN], Operation, Context, [Transaction ID], [Begin time] FROM sys.fn_dblog (NULL, NULL) WHERE operation IN ('LOP_INSERT_ROWS');

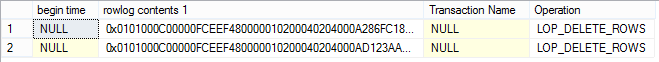

To see transactions for deleted records, run:

SELECT [begin time], [rowlog contents 1], [Transaction Name], Operation FROM sys.fn_dblog (NULL, NULL) WHERE operation IN ('LOP_DELETE_ROWS');
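Converting the binary values returned by fn_dblog into column values depends on the column data type. The following is a minimal Python sketch of a few such conversions, not an official decoder. The function names are hypothetical, the byte layouts are the commonly described on-disk formats (little-endian integers, UTF-16LE for NVARCHAR, ticks followed by days for DATETIME), and the cp1252 code page for VARCHAR is an assumption that depends on the column collation.

```python
import struct
from datetime import datetime, timedelta


def hex_to_bytes(text: str) -> bytes:
    """Turn a hex literal as displayed by SSMS (e.g. '0x01000000') into bytes."""
    text = text.strip()
    if text.lower().startswith("0x"):
        text = text[2:]
    return bytes.fromhex(text)


def decode_int(raw: bytes) -> int:
    """SMALLINT, INT and BIGINT values: signed, little-endian."""
    return int.from_bytes(raw, byteorder="little", signed=True)


def decode_nvarchar(raw: bytes) -> str:
    """NCHAR/NVARCHAR values: UTF-16 little-endian."""
    return raw.decode("utf-16-le")


def decode_varchar(raw: bytes, codepage: str = "cp1252") -> str:
    """CHAR/VARCHAR values: single-byte text in the collation's code page
    (cp1252 is only an assumed default here)."""
    return raw.decode(codepage)


def decode_datetime(raw: bytes) -> datetime:
    """DATETIME values: 4 bytes of 1/300-second ticks since midnight,
    followed by 4 bytes of days since 1900-01-01."""
    ticks, days = struct.unpack("<Ii", raw)
    return datetime(1900, 1, 1) + timedelta(days=days, seconds=ticks / 300)


if __name__ == "__main__":
    print(decode_int(hex_to_bytes("0x01000000")))
    print(decode_nvarchar(hex_to_bytes("0x610062006300")))
    print(decode_datetime(hex_to_bytes("0x2C01000000000000")))
```

Each data type needs its own decoder, which is why the column definitions of the table have to be known before any value can be read from a log record.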

The inserted and deleted rows are displayed as hexadecimal values. To break them into fields, you have to know the row format that is used, understand the status bits, know the total number of columns, and so on.
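To illustrate what breaking a hexadecimal row image into fields involves, here is a hedged Python sketch. It assumes the commonly described layout of a SQL Server data record (two status bytes, a 2-byte offset to the end of the fixed-length data, the fixed-length data, a column count with a NULL bitmap, and then a variable-length column offset array followed by the variable-length data). The layout is not officially documented for log records, the function name parse_row is hypothetical, the widths of the fixed-length columns must be supplied by the caller, and off-row or row-overflow data is ignored.

```python
import struct


def parse_row(record: bytes, fixed_widths: list) -> dict:
    """Split a row image into raw column slices (assumed record layout)."""
    status_a = record[0]
    record_type = (status_a >> 1) & 0x07       # 0 = primary data record
    has_null_bitmap = bool(status_a & 0x10)
    has_var_columns = bool(status_a & 0x20)

    # Bytes 2-3: offset at which the fixed-length portion ends
    (fixed_end,) = struct.unpack_from("<H", record, 2)

    # Fixed-length columns start at byte 4; widths come from the table schema
    fixed_columns = []
    pos = 4
    for width in fixed_widths:
        fixed_columns.append(record[pos:pos + width])
        pos += width

    pos = fixed_end
    column_count = None
    null_bitmap = b""
    if has_null_bitmap:
        # 2-byte total column count, then one bit per column
        (column_count,) = struct.unpack_from("<H", record, pos)
        pos += 2
        bitmap_len = (column_count + 7) // 8
        null_bitmap = record[pos:pos + bitmap_len]
        pos += bitmap_len

    var_columns = []
    if has_var_columns:
        # 2-byte count, then one 2-byte END offset per variable-length column
        (var_count,) = struct.unpack_from("<H", record, pos)
        pos += 2
        end_offsets = struct.unpack_from(f"<{var_count}H", record, pos)
        pos += 2 * var_count
        start = pos
        for end in end_offsets:
            var_columns.append(record[start:end])
            start = end

    return {
        "record_type": record_type,
        "column_count": column_count,
        "null_bitmap": null_bitmap,
        "fixed_columns": fixed_columns,
        "var_columns": var_columns,
    }


if __name__ == "__main__":
    # Hand-built sample: one 4-byte INT (value 1) and one VARCHAR ('abc')
    sample = bytes.fromhex("300008000100000002000001001200616263")
    print(parse_row(sample, fixed_widths=[4]))
```

The sample record is constructed by hand for this sketch and is not taken from a real log. The slices it returns are still raw bytes, so each one has to be converted according to its column data type.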

An UPDATE log record does not store the complete old and new values, just what was changed in the record. For example, SQL Server may log that "G" was changed to "F" when the value "GLOAT" was actually changed to "FLOAT". To see the full value, you have to manually reconstruct the state prior to the update, which involves reconstructing all the intermediary states between the row’s original insertion into the page and the update you are trying to reconstruct.
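The reconstruction of intermediary states can be sketched as a replay of byte-level changes on top of the originally inserted row image. The Python below is an illustration only: the function names are hypothetical, and it assumes you have already extracted, for each update, the offset within the row plus the old and new byte fragments from the log records.

```python
def apply_modify(row: bytes, offset: int, old: bytes, new: bytes) -> bytes:
    """Apply one logged change: replace the old fragment at offset with the new one."""
    if row[offset:offset + len(old)] != old:
        # The logged "before" bytes must match, otherwise the chain is broken
        raise ValueError("row image does not match the logged old bytes")
    return row[:offset] + new + row[offset + len(old):]


def replay(inserted_row: bytes, modifications) -> list:
    """Return every row state, from the inserted image through each update.

    modifications is an iterable of (offset, old_bytes, new_bytes) tuples
    ordered by LSN, oldest first.
    """
    states = [inserted_row]
    row = inserted_row
    for offset, old, new in modifications:
        row = apply_modify(row, offset, old, new)
        states.append(row)
    return states


if __name__ == "__main__":
    # The log only says "G" became "F" at offset 0; the full value needs the insert
    states = replay(b"GLOAT", [(0, b"G", b"F")])
    print(states[-2], "->", states[-1])
```

The state before the update of interest is the second-to-last element of the returned list. If any update in the chain is missing from the available logs, the check in apply_modify fails and the value cannot be rebuilt this way.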

When a BLOB is deleted, its content is not written to the transaction log, so reading the log record for the DELETE alone cannot bring the BLOB back. Only if there is an INSERT log record for the deleted BLOB, and you manage to pair the two, will you be able to recover the deleted BLOB from the transaction log using fn_dblog.

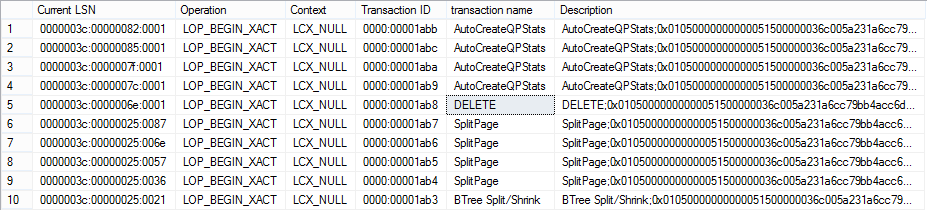

Use fn_dump_dblog

To read native or natively compressed transaction log backups, even without the online database, use the fn_dump_dblog function. Again, this function is undocumented.

- Run the fn_dump_dblog function on a specific transaction log backup. Note that you have to specify all of its parameters, including the 63 trailing DEFAULT values

SELECT * FROM fn_dump_dblog (NULL,NULL,N'DISK',1,N'E:\ApexSQL\backups\AdventureWorks2012_05222013.trn', DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT);

As with fn_dblog, 129 columns are returned, so returning only the specific ones is recommended.

SELECT [Current LSN], Operation, Context, [Transaction ID], [transaction name], Description FROM fn_dump_dblog (NULL,NULL,N'DISK',1,N'E:\ApexSQL\backups\AdventureWorks2012_05222013.trn', DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT,DEFAULT, DEFAULT);

And you are back to square one, as with fn_dblog: you need to reconstruct all row values manually, reconstruct entire state chains for UPDATE operations and BLOB values, and so on.

If you don’t want to actually extract transactions from the transaction log backup, but rather to restore the database to a point in time before a specific operation occurred, you can:

- Determine the LSN for this transaction

- Convert the LSN into the format used in the WITH STOPBEFOREMARK = '<mark_name>' clause, e.g. 00000070:00000011:0001 should be transformed into 112000000001700001

- Restore the full log backup chain until you reach the time when the transactions occurred. Use the WITH STOPBEFOREMARK = '<mark_name>' clause to specify the referencing transaction LSN

RESTORE LOG AdventureWorks2012 FROM DISK = N'E:\ApexSQL\backups\AW2012_05232013.trn' WITH STOPBEFOREMARK = 'lsn:112000000001700001', NORECOVERY;
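The LSN conversion for the STOPBEFOREMARK clause follows a fixed pattern: each of the three colon-separated hexadecimal parts is converted to decimal, the second part is zero-padded to 10 digits and the third to 5 digits, and the results are concatenated. A minimal Python sketch of that rule, with hypothetical helper names, reproduces the 00000070:00000011:0001 example from this article:

```python
def lsn_to_decimal(lsn: str) -> str:
    """Convert 'VLF:block:slot' in hexadecimal to the decimal LSN string."""
    # Raises ValueError if the input does not have exactly three hex parts
    vlf, block, slot = (int(part, 16) for part in lsn.split(":"))
    # First part unpadded, second padded to 10 digits, third to 5 digits
    return f"{vlf}{block:010d}{slot:05d}"


def stop_before_mark(lsn: str) -> str:
    """Build the value used in WITH STOPBEFOREMARK = '...'."""
    return f"lsn:{lsn_to_decimal(lsn)}"


if __name__ == "__main__":
    print(stop_before_mark("00000070:00000011:0001"))
    # prints lsn:112000000001700001
```

Here 0x70 is 112, 0x11 is 17 (padded to 0000000017) and 0x0001 is 1 (padded to 00001), which gives 112000000001700001.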

Use DBCC PAGE

Another useful, but again undocumented, command is DBCC PAGE. Use it to read the content of online database files, MDF and LDF. The syntax is:

DBCC PAGE ( {'dbname' | dbid}, filenum, pagenum [, printopt={0|1|2|3} ])

To dump the first page of the AdventureWorks2012 transaction log file, run:

SELECT FILE_ID ('AdventureWorks2012_Log') AS 'File ID' -- to determine Log file ID = 2

DBCC PAGE (AdventureWorks2012, 2, 0, 2)

By default, the output is not sent to the client, and only the completion message is returned:

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

To see the output, turn on trace flag 3604 first and then re-run the command:

DBCC TRACEON (3604, -1)

DBCC PAGE (AdventureWorks2012, 2, 0, 2)

The result is not the friendliest presentation of your database data and is basically no different from viewing it in a hex editor (just more uncomfortable), though at least you get access to the online data.

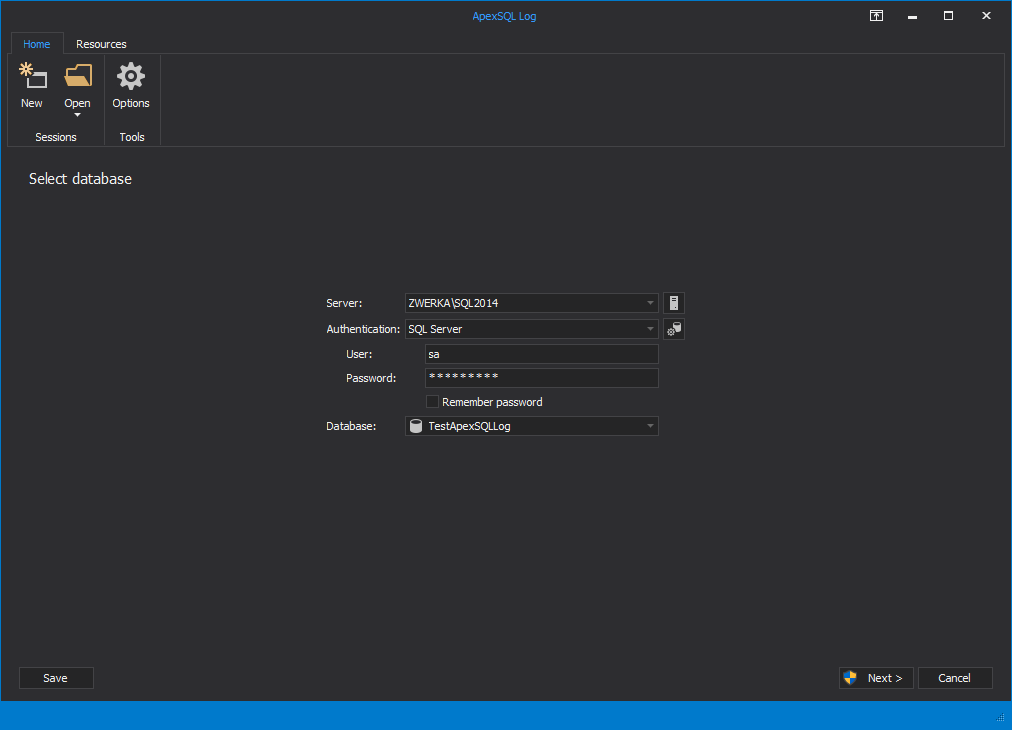

Use ApexSQL Log

ApexSQL Log is a SQL Server transaction log reader that reads online transaction logs, detached transaction logs and transaction log backups, both native and natively compressed. When needed, it will also read database backups to get enough data for a successful reconstruction. It can replay data and object changes that have affected a database, including those that occurred before it was installed. Unlike the undocumented and unsupported functions described above, you’ll get perfectly understandable information about what happened, on which object, and what the old and the new values were.

- Start ApexSQL Log

- Connect to the database for which you want to read the transaction logs

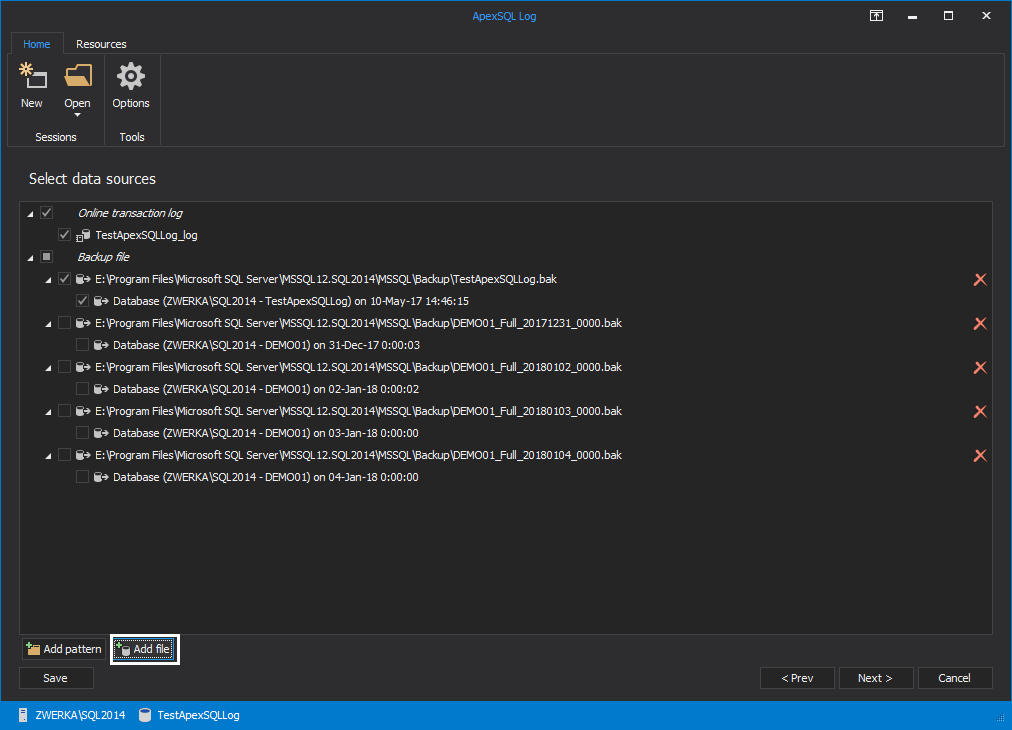

- In the Select data sources step, select the logs you want to read. Make sure they form a full chain. To add transaction log backups and detached LDF files, use the Add button

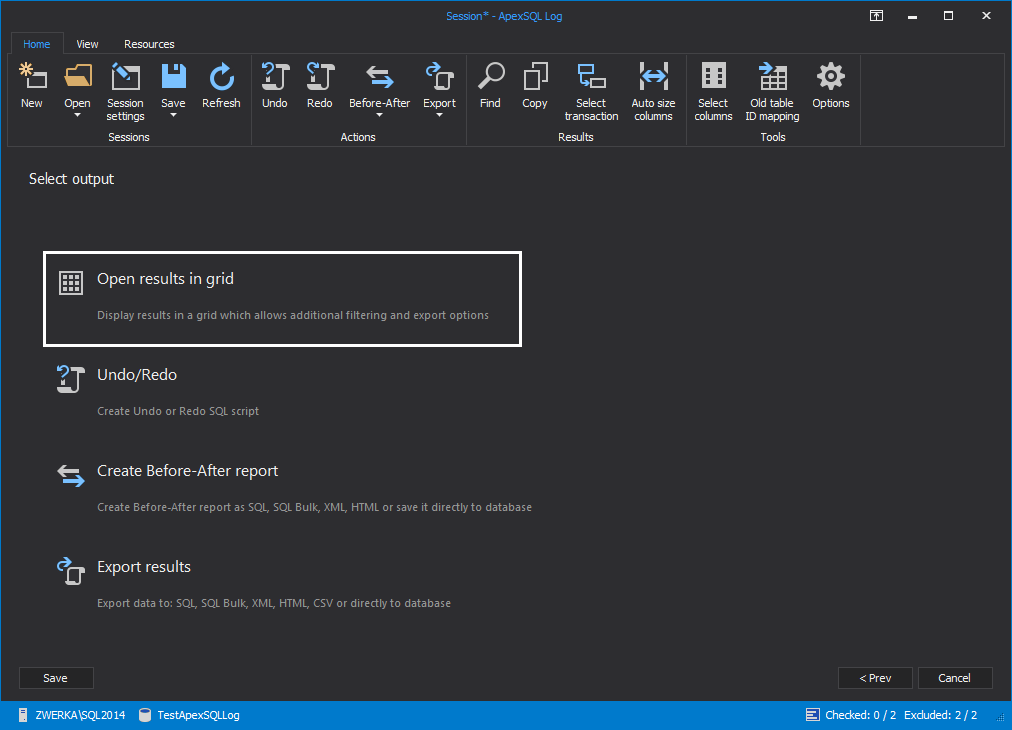

- In the next step, choose the output type. For analysis purposes, select Open results in grid: this loads the audited data into the grid, which is the preferable output for investigation and analysis tasks

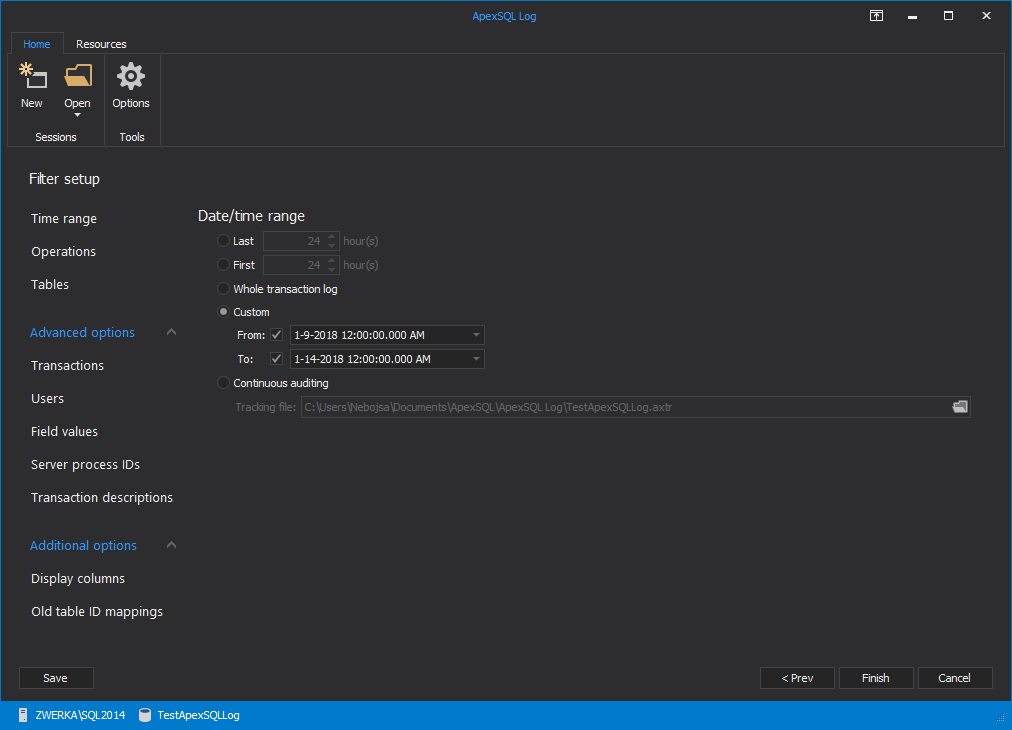

- Next, use the Filter setup options to narrow down the transactions read using the time range, operation type, table and other available filters

- Click Finish

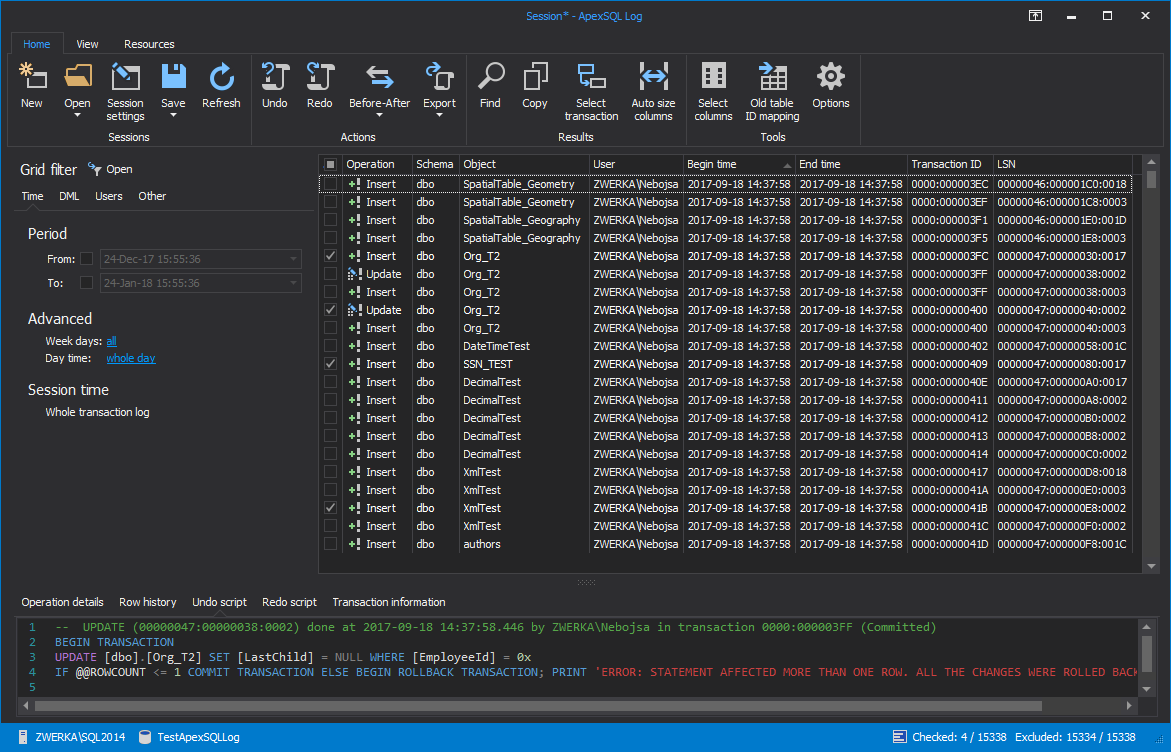

Fully comprehensive results will be shown in the ApexSQL Log grid.

You will be able to see the time the operation began and ended, the operation type, the schema and name of the object affected, the name of the user who executed the operation, and the computer and application used to execute the operation. For UPDATEs, you’ll see the old and the new values of the updated fields.

Downloads

Please download the script(s) associated with this article on our GitHub repository.

Please contact us for any problems or questions with the scripts.
