RAID 10 is not the same as RAID 01.

This article explains the difference between the two with a simple diagram.

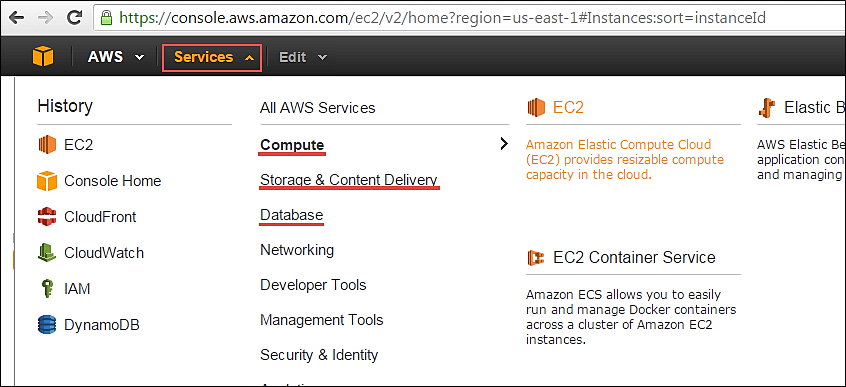

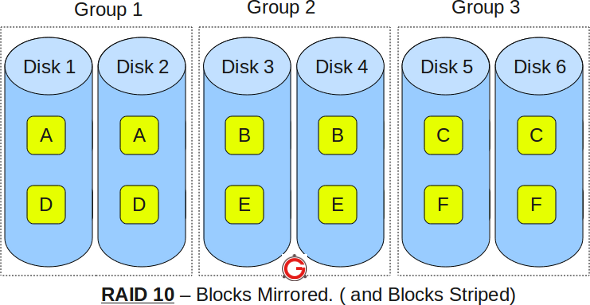

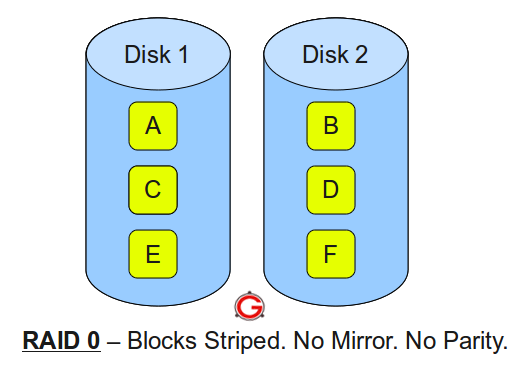

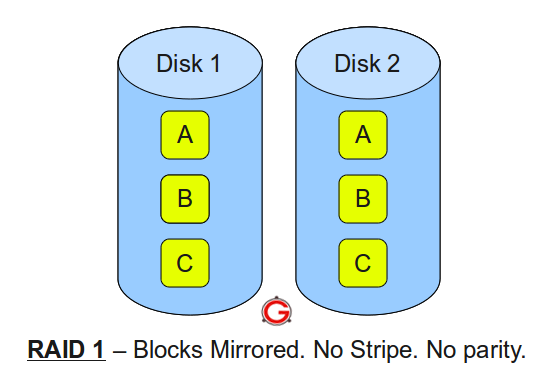

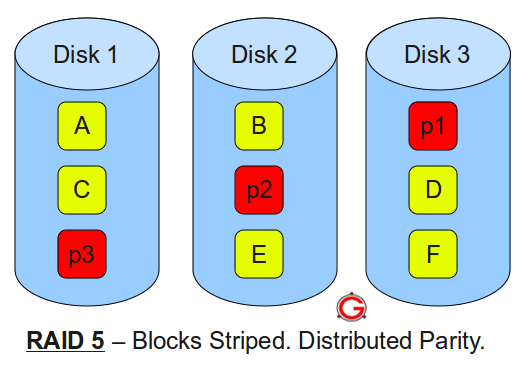

I’m going to keep this explanation very simple for you to understand the basic concepts well. In the following diagrams A, B, C, D, E and F represents blocks.

RAID 10

- RAID 10 is also called as RAID 1+0

- It is also called as “stripe of mirrors”

- It requires minimum of 4 disks

- To understand this better, group the disks in pair of two (for mirror). For example, if you have a total of 6 disks in RAID 10, there will be three groups–Group 1, Group 2, Group 3 as shown in the above diagram.

- Within the group, the data is mirrored. In the above example, Disk 1 and Disk 2 belongs to Group 1. The data on Disk 1 will be exactly same as the data on Disk 2. So, block A written on Disk 1 will be mirroed on Disk 2. Block B written on Disk 3 will be mirrored on Disk 4.

- Across the group, the data is striped. i.e Block A is written to Group 1, Block B is written to Group 2, Block C is written to Group 3.

- This is why it is called “stripe of mirrors”. i.e the disks within the group are mirrored. But, the groups themselves are striped.

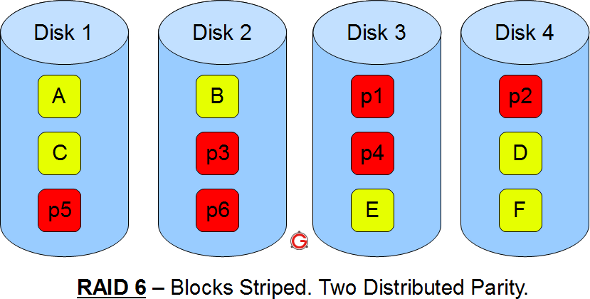

If you are new to this, make sure you understand how RAID 0, RAID 1 and RAID 5 and RAID 2, RAID 3, RAID 4, RAID 6 works.

RAID 01

- RAID 01 is also called as RAID 0+1

- It is also called as “mirror of stripes”

- It requires minimum of 3 disks. But in most cases this will be implemented as minimum of 4 disks.

- To understand this better, create two groups. For example, if you have total of 6 disks, create two groups with 3 disks each as shown below. In the above example, Group 1 has 3 disks and Group 2 has 3 disks.

- Within the group, the data is striped. i.e In the Group 1 which contains three disks, the 1st block will be written to 1st disk, 2nd block to 2nd disk, and the 3rd block to 3rd disk. So, block A is written to Disk 1, block B to Disk 2, block C to Disk 3.

- Across the group, the data is mirrored. i.e The Group 1 and Group 2 will look exactly the same. i.e Disk 1 is mirrored to Disk 4, Disk 2 to Disk 5, Disk 3 to Disk 6.

- This is why it is called “mirror of stripes”. i.e the disks within the groups are striped. But, the groups are mirrored.

Main difference between RAID 10 vs RAID 01

- Performance on both RAID 10 and RAID 01 will be the same.

- The storage capacity on these will be the same.

- The main difference is the fault tolerance level. On most implememntations of RAID controllers, RAID 01 fault tolerance is less. On RAID 01, since we have only two groups of RAID 0, if two drives (one in each group) fails, the entire RAID 01 will fail. In the above RAID 01 diagram, if Disk 1 and Disk 4 fails, both the groups will be down. So, the whole RAID 01 will fail.

- RAID 10 fault tolerance is more. On RAID 10, since there are many groups (as the individual group is only two disks), even if three disks fails (one in each group), the RAID 10 is still functional. In the above RAID 10 example, even if Disk 1, Disk 3, Disk 5 fails, the RAID 10 will still be functional.

- So, given a choice between RAID 10 and RAID 01, always choose RAID 10.

Following are the key points to remember for RAID level 1.

Following are the key points to remember for RAID level 1.

Following are the key points to remember for RAID level 10.

Following are the key points to remember for RAID level 10.