How to Move Oracle VM OCFS2 Repositories Between Server Pools

The ability to quickly move Oracle VM OCFS2 repositories between server pools (source and target) is an essential Oracle VM lifecycle operation.

Prerequisites:

- You may have to wipe the Oracle VM Manager repository database to complete this operation. If you have never wiped the Oracle VM Manager repository database and rediscovered the resources, confirm BEFORE moving forward that you can wipe it and recover all the resources.

- Before starting the process, the OCFS2 repositories that will be moved from the source to the target server pool must not be mounted by any Oracle VM Server.

- The OCFS2 repositories should only be zoned and masked to the target Oracle VM Servers.
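
The "not mounted" prerequisite can be spot-checked on each server before starting. The following is a minimal sketch, not part of the original procedure: `is_mounted` is a hypothetical helper that looks for a device path in a mounts table (by default `/proc/mounts`). It assumes the repository appears there under its `/dev/mapper/...` path, and it only sees mounts on the server where it runs, so it would have to be run on every Oracle VM Server that can reach the LUN.

```shell
# Hypothetical helper: succeeds if the given device appears as a mount
# source in the mounts table (default: /proc/mounts on this server only).
is_mounted() {
    dev=$1
    table=${2:-/proc/mounts}
    # The first field of each line is the mount source (device path).
    awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$table"
}
```

For example, `is_mounted "/dev/mapper/<wwid of existing repo1>" && echo "still mounted on this server"` would flag a repository that has to be unmounted first.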

1) This step is optional/informational. It is done on only one dom0 to confirm the cluster IDs: first the target pool's cluster ID, then, as a sanity check, the cluster ID stamped on the old repositories.

a) From only one dom0, as root, confirm the target pool's cluster ID:

# o2cluster -o /dev/mapper/<the poolfs>

Write down the cluster ID from the new pool.

b) From only one dom0, as root, confirm the previous pool's cluster ID (the repositories will still carry the previous pool's cluster ID):

# o2cluster -o /dev/mapper/<wwid of existing repo1>

# o2cluster -o /dev/mapper/<wwid of existing repo2>

# o2cluster -o /dev/mapper/<wwid of existing repo3>
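
When there are many repositories, the comparison in step 1 can be scripted. This is a rough sketch under stated assumptions: `compare_cluster_stamps` is a hypothetical function name, the device paths are passed as arguments, and the output of `o2cluster -o` is compared as an opaque string rather than parsed.

```shell
# Sketch: print each repository LUN's on-disk cluster stamp next to the
# target pool's, flagging the ones that differ. Device paths are arguments:
# the pool file system first, then one or more repository LUNs.
compare_cluster_stamps() {
    pool_dev=$1
    shift
    pool_stamp=$(o2cluster -o "$pool_dev") || return 1
    echo "pool $pool_dev: $pool_stamp"
    for dev in "$@"; do
        stamp=$(o2cluster -o "$dev") || return 1
        if [ "$stamp" = "$pool_stamp" ]; then
            echo "$dev: $stamp (matches pool)"
        else
            echo "$dev: $stamp (differs from pool)"
        fi
    done
}
```

It would be called as `compare_cluster_stamps "/dev/mapper/<the poolfs>" "/dev/mapper/<wwid of existing repo1>" ...`. Before the move, every repository is expected to show up as differing from the pool.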

2) From only one dom0, as root, run fsck.ocfs2 on each repository and answer y when asked to recover the cluster configuration; that is all that is needed:

# fsck.ocfs2 /dev/mapper/UUID

fsck.ocfs2 1.8.2

[RECOVER_CLUSTER_INFO] The running cluster is using the o2cb stack

with the cluster name ce53582811c386e9, but the filesystem is configured for

the o2cb stack with the cluster name 76e9713d2092bfe6. Thus, fsck.ocfs2 cannot

determine whether the filesystem is in use or not. This utility can

reconfigure the filesystem to use the currently running cluster configuration.

DANGER: YOU MUST BE ABSOLUTELY SURE THAT NO OTHER NODE IS USING THIS

FILESYSTEM BEFORE MODIFYING ITS CLUSTER CONFIGURATION.

Recover cluster configuration information the running cluster? <n> y

Checking OCFS2 filesystem in /dev/mapper/36000d31000394200000000000000004e:

Label: OVS40ce40cbe9d1e

UUID: 0004FB000005000092940CE40CBE9D1E

Number of blocks: 402653184

Block size: 4096

Number of clusters: 1572864

Cluster size: 1048576

Number of slots: 32

/dev/mapper/36000d31000394200000000000000004e wasn't cleanly unmounted by all nodes. Attempting to replay the journals for nodes that didn't unmount cleanly

Checking each slot's journal.

Replaying slot 0's journal.

Slot 0's journal replayed successfully.

/dev/mapper/36000d31000394200000000000000004e is clean. It will be checked after 20 additional mounts.

Slot 0's journal dirty flag removed

Before step 3, confirm whether the cluster ID has been reset:

# o2cluster -o /dev/mapper/<wwid of existing repo1>

# o2cluster -o /dev/mapper/<wwid of existing repo2>

# o2cluster -o /dev/mapper/<wwid of existing repo3>

If the correct cluster ID has been set, skip step 3.

3) To set the new pool's cluster ID on the previous repositories, as root, from only one dom0, type the following command for each repo (this will set the correct cluster ID):

# tunefs.ocfs2 --update-cluster-stack /dev/mapper/<wwid from each LUN>
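
The "skip step 3 if the ID is already correct" rule can be folded into a small loop. The sketch below is an illustration only: `update_stale_stamps` and the `TUNEFS` override variable are hypothetical names, and it assumes that a repository whose `o2cluster -o` output equals the pool file system's needs no update. `tunefs.ocfs2` may still ask for confirmation interactively.

```shell
# Sketch: run tunefs.ocfs2 --update-cluster-stack only on LUNs whose
# on-disk cluster stamp still differs from the pool file system's stamp.
# TUNEFS is a hypothetical override, useful for a dry run.
update_stale_stamps() {
    pool_dev=$1
    shift
    pool_stamp=$(o2cluster -o "$pool_dev") || return 1
    for dev in "$@"; do
        stamp=$(o2cluster -o "$dev") || return 1
        if [ "$stamp" = "$pool_stamp" ]; then
            echo "$dev: already set, skipping"
        else
            echo "$dev: updating"
            "${TUNEFS:-tunefs.ocfs2}" --update-cluster-stack "$dev" || return 1
        fi
    done
}
```

Setting `TUNEFS=echo` before calling the function prints the commands that would be run instead of running them.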

4) Next, on only one of the dom0s, mount each of the repositories and confirm, or correct, the Oracle VM Manager UUID stored in each repository. The target Oracle VM Manager UUID is listed in the /dev/mapper/<the poolfs>/.poolfs file, i.e. # cat /dev/mapper/<the poolfs>/.poolfs

and in the Oracle VM Manager GUI under Help => About. In each repository, the entry should read:

OVS_REPO_MGR_UUID=CORRECT_UUID

Save the change.

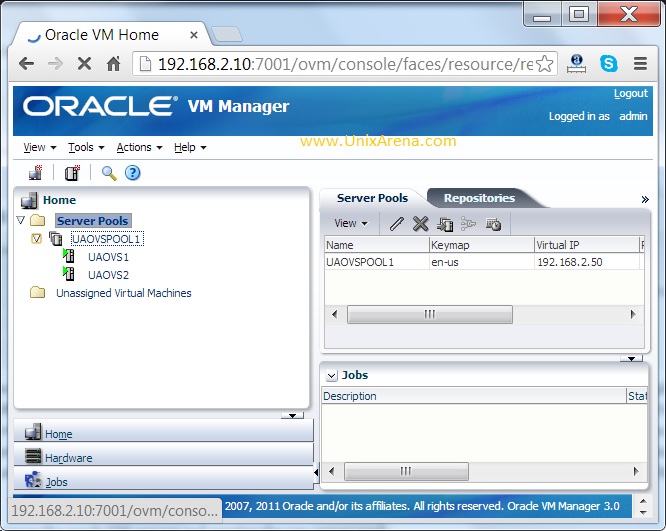
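
If the UUID has to be changed on several repositories, the edit in step 4 can be done with sed instead of a text editor. This is a minimal sketch, not the author's method: `set_mgr_uuid` is a hypothetical helper, and the path of the repository configuration file is left as an argument for the caller to supply.

```shell
# Sketch: set OVS_REPO_MGR_UUID in a repository configuration file,
# keeping a backup copy (<file>.bak) next to it.
# $1 = path to the file holding OVS_REPO_MGR_UUID, $2 = correct UUID.
set_mgr_uuid() {
    file=$1
    uuid=$2
    cp -p "$file" "$file.bak" || return 1
    sed "s/^OVS_REPO_MGR_UUID=.*/OVS_REPO_MGR_UUID=$uuid/" "$file.bak" > "$file" || return 1
    # Print the resulting entry so the change can be confirmed.
    grep "^OVS_REPO_MGR_UUID=" "$file"
}
```

The function prints the resulting `OVS_REPO_MGR_UUID=` line so it can be compared with the value shown under Help => About.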

5) From the Oracle VM Manager, access the Storage tab --> Shared File Systems and refresh each repository (right-click the repository, select any server, and click "Refresh").

6) Next, access the Repositories tab and present each repository to the pool, confirming that all of the hosts have been added. Then refresh each repository.

If you receive a message saying there is a mismatch between the repository and pool ID, wipe the Oracle VM Manager database repository and rediscover all the objects. The MySQL DB must be wiped to remove the bad repository entries. The user-friendly names will also need to be restored after the resources have been rediscovered.

7) The VMs will now all be in the Unassigned Virtual Machines directory. Move them to servers and start each VM.

How to wipe the Oracle VM Manager MySQL DB and rediscover all resources:

1) Oracle VM Manager host: Reset the Oracle VM Manager Database Repository. As root, access the Oracle VM Manager host, and drop the Oracle VM Manager Database Repository:

MySQL:

# /u01/app/oracle/ovm-manager-3/bin/ovm_upgrade.sh --deletedb --dbhost=localhost --dbtype=MySQL --dbport=49500 --dbsid=ovs --dbuser=ovs --dbpass=PASSWORD

Note: Substitute PASSWORD with the admin password.

SE or EE Database:

# /u01/app/oracle/ovm-manager-3/bin/ovm_upgrade.sh --dbhost=localhost --dbport=1521 --dbsid=MYSID --dbuser=ovs --dbpass=PASSWORD --deletedb

Note: Substitute localhost with the hostname, i.e. localhost or the host name of the DB server, MYSID with the Database SID, PASSWORD with the Database SYS password.

2) Oracle VM Manager Hosts: As root, access the Oracle VM Manager host, and stop and start the ovmm service:

# service ovmm stop && service ovmm start

3) Oracle VM Manager GUI: From the Oracle VM Manager Servers and VMs page, discover the Oracle VM Servers.

Note: Up to Oracle VM Release 3.2.7, discover all the Oracle VM Servers. With Oracle VM Release 3.2.8, discover only one Oracle VM Server.

4) Oracle VM Manager GUI: From the Oracle VM Manager Repositories page, refresh each storage repository, i.e. right click each repository, and click refresh.

5) Oracle VM Manager GUI: From the Servers and VMs page, rediscover each Oracle VM Server.

6) Oracle VM Manager GUI: From the Networking => Virtual NIC page, create new MAC addresses. Only the MAC addresses in use will be re-discovered.

4 COMMENTS:

Is there a typo or am I missing something. I am expecting

cat /etc/oracle/scls_scr/rhel5-test/root/crsstart

disable

for the last execution because you disable it but your post says it is

cat /etc/oracle/scls_scr/rhel5-test/root/crsstart

enable

Am I missing something ?

I'll check again...

But In my Test System, It's "enable" status in /etc/oracle/scls_scr/rhel5-test/root/crsstart file.

After test installation Grid Infrastructure for a Standalone Server (solaris)

$ crsctl check has

CRS-4638: Oracle High Availability Services is online

$ crsctl config has

CRS-4622: Oracle High Availability Services autostart is enabled.

$ cat /var/opt/oracle/scls_scr/sundb/root/crsstart

enable

$ cat /var/opt/oracle/scls_scr/sundb/oracle/ohasdstr

enable

$ crsctl disable has

CRS-4621: Oracle High Availability Services autostart is disabled.

$ cat /var/opt/oracle/scls_scr/sundb/root/crsstart

enable

$ cat /var/opt/oracle/scls_scr/sundb/oracle/ohasdstr

disable

$ crsctl enable has

CRS-4622: Oracle High Availability Services autostart is enabled.

$ cat /var/opt/oracle/scls_scr/sundb/root/crsstart

enable

$ cat /var/opt/oracle/scls_scr/sundb/oracle/ohasdstr

enable

That shows the crsstart file is not used anymore...

and with Grid Infrastructure for a Standalone Server, the "crsctl ... crs" commands cannot be used...

$ crsctl disable crs

CRS-4013: This command is not supported in a single-node configuration.

CRS-4000: Command Disable failed, or completed with errors.